DFL Newsletter Issue #24: DFL's Practice Playbook on Responsible AI & Partnerships & Research on RAI for the Majority World

From the launch of our Practice Playbook on Responsible AI to new partnerships advancing Responsible AI in the Majority World, and global conversations on data governance and mobility futures, Digital Futures Lab has spent the past month at the heart of critical efforts to champion fair, just, and accountable AI for all. Let’s dive in!

research 📑

We are thrilled to announce the launch of DFL’s Practice Playbook on Responsible AI - a guide for designing, developing, and deploying Responsible AI interventions for social impact organisations. Building on insights and best practices from our Responsible AI Fellowship, the Playbook is a practical, field-informed guide grounded in real-world challenges and established scholarship on Responsible AI. It also includes concrete examples of real-world implementation and a curated set of tools and resources recommended for AI developers.

Urvashi and Aarushi co-authored a paper titled "Mapping the Potentials and Limitations of Using Generative AI Technologies to Address Socioeconomic Challenges in Low- to Middle-Income Countries (LMICs)," which was published as a Nature preprint. Written under the ambit of the Gates Foundation’s Grand Challenges initiative, the paper acknowledges the potential for Generative AI to propel progress, while highlighting the risks to the individuals and societies that these technologies seek to benefit, and the significant barriers that need to be addressed to ensure equitable and inclusive AI development and use.

Urvashi and Aarushi also co-authored a paper with Cornell University’s Dr. Aditya Vashistha on how gender bias manifests in LLMs deployed in critical social sectors in India, such as agriculture and healthcare. The paper, which has been accepted at the ACM Conference on Fairness, Accountability, and Transparency (ACM FAccT) 2025, explores how LLMs trained and deployed without gender-intentional design often replicate and reinforce patriarchal norms. The full text of the paper will be out soon!

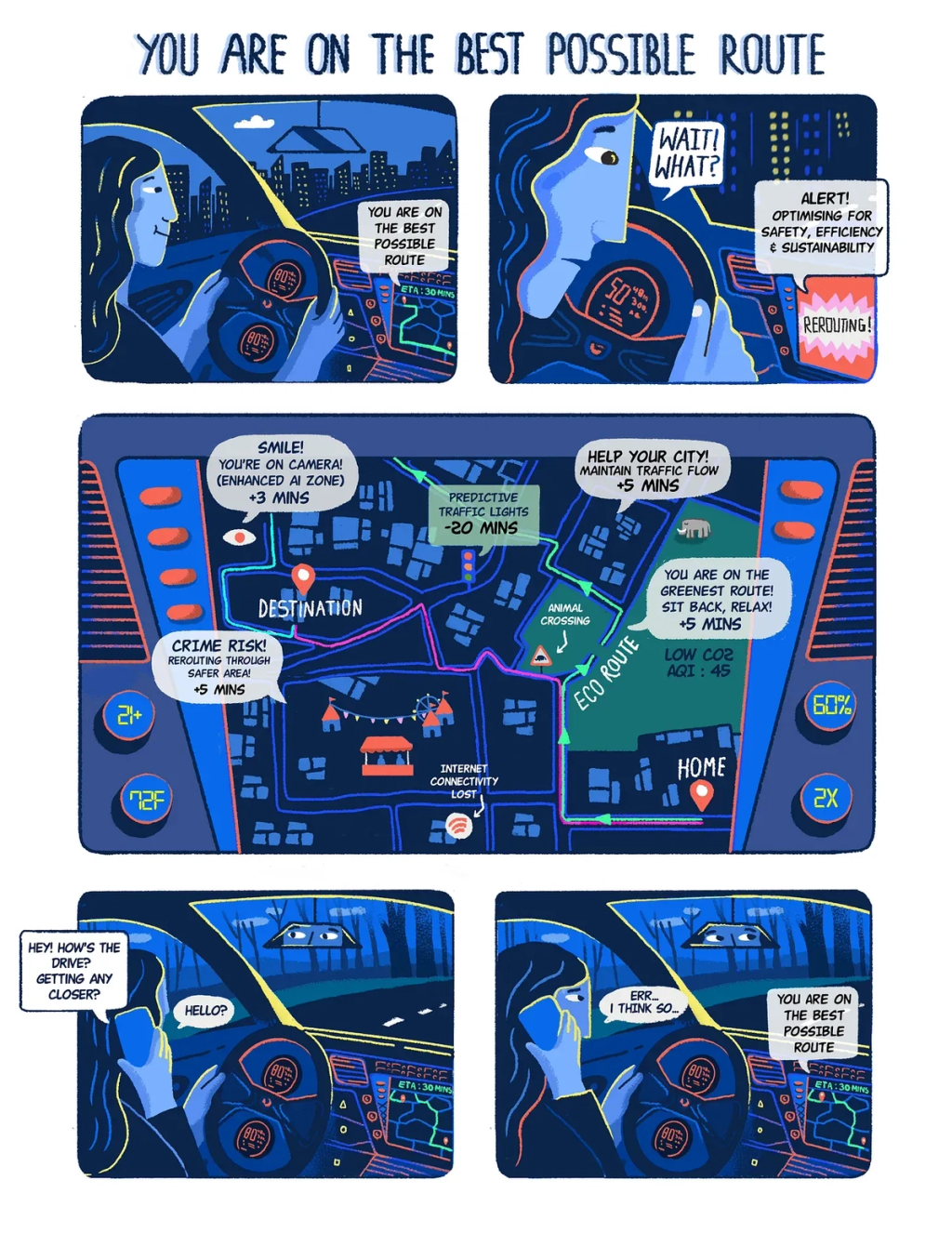

Issue 07 of the Code Green newsletter and episode 07 of the podcast are out, and this time, we’re taking things on the road, sort of. Both explore AI’s role in Asia’s mobility futures. We examine the goals being optimised: whether the pursuit of efficiency in the sector leads to sustainable outcomes, and how access and connectivity are incorporated into AI policies for mobility.

events 🎤

DFL hosted a virtual launch event to release the Practice Playbook on Responsible AI publicly. The launch event was kicked off with a presentation of the playbook by Sasha John, Research Associate and Project Co-ordinator of the Responsible AI Fellowship, followed by a panel discussion moderated by Aarushi. She was joined by the following experts:

- Eunsong Kim, Chief of Sector for Social & Human Sciences, UNESCO

- Alpan Raval, Chief Scientist, AI/ML, Wadhwani AI

- Dr Namita Singh, Director of User Experience, Digital Green

- Hanna Minaye, Associate Manager, Research, mDoc

- Vinod Rajasekaran, Head Fractional CxO Program, Project Tech4Dev

Watch the webinar below!

Aarushi attended UNICEF Innocenti’s Data Governance Workshop in Florence, part of their global effort to advance child-centred data governance. She delivered a lightning talk on India’s edtech landscape, highlighting tensions between formal governance norms and extractive business models. As a follow-up, DFL will serve as the Asia region convener of an online consultation on Edtech and Data Governance in Asia, in collaboration with Tech Legality and UNICEF Innocenti!

Sr. Research Associate Dona Mathew joined a panel on 'Challenges and Opportunities of ML for Climate Action in Asia,' organised by Climate Change AI at the International Conference on Learning Representations (ICLR) in Singapore. On the panel, which was moderated by Kin Ho Poon and included co-panelists James Askew and Karen Wang, Dona spoke on the ethical risks of applying ML in climate strategies given intra-regional disparities in policy frameworks, climate data and compute resources. Watch the panel here.

media ✍🏽

- The Code Green podcast episode on AI supply chains was featured in Data & Society’s latest newsletter issue.

- Research Associate Anushka Jain was quoted in a TechCrunch article on the use of AI bots for fact-checking. Speaking on the likelihood of AI bots spreading misinformation further, Anushka commented that they could fabricate information to respond to an end-user.

- DFL’s research on mitigating gender bias in Indian language LLMs was featured on Cornell University’s Global AI Initiative’s research page.

partnerships 🤝🏾

DFL is officially a partner for the Pulitzer Center’s South to South AI Accountability CoLab, a program that aims to strengthen the connection between AI accountability reporting and civil society engagement, contributing to a more informed governance of AI in the Global South.

We also recently joined the Coalition for Responsible Evolution of AI (CoRE-AI) as a member. CoRE-AI brings together various stakeholders within the AI ecosystem to drive the discourse on the responsible evolution of AI. The coalition comprises startups, civil society organisations, think tanks, academic institutions, researchers, experts, and industry representatives – all working together to support the responsible integration of AI in our society.

what we’re reading 📖

- Anushka: 📕 Why India fell behind China in tech innovation by Rest of World

- Shivranjana: 📕 Is An Anti-Fascist Approach to Artificial Intelligence Possible? by Tech Policy Press

- Harleen: 📕 The Phony Comforts of AI Optimism by Edward Zitron

April Spotlight: Responsible AI in Practice 🔦

Responsible AI (RAI) is, broadly, a set of principles intended to bridge the gap between high-level ethical theory and practical, real-world AI deployment. It is premised on principles such as transparency, safety, and fairness. However, today, RAI risks getting diluted. Popularised by the AI industry itself, the vocabulary of RAI has been increasingly co-opted by Big Tech to ethics-wash AI products, policies, and innovation agendas.

At Digital Futures Lab, we see RAI differently: as a contextual and action-driven practice. A central question in actioning Responsible AI is: Is AI even the right solution for this problem?

Key Learnings from Our Work

Through our ongoing work and in-depth conversations with practitioners and researchers, we surface key insights that reveal both the possibilities and pitfalls of Responsible AI today:

1. Responsible AI begins by questioning whether AI is needed at all.

Not every problem needs an AI solution. AI-first approaches often worsen access, sustainability, or care outcomes, especially when driven by funding trends or scale pressures. Responsible practice starts by asking: Is AI truly the right solution to a problem? Sometimes, the most responsible outcome after scoping is recognising that a low-tech or non-AI solution is more equitable or that an AI intervention, while technically possible, would deepen existing harms.

2. Principles aren't enough: responsibility in AI needs clear, operational pathways.

Without concrete steps, principles like fairness and transparency risk becoming checkbox exercises or industry grandstanding. As Goodhart’s Law goes: once a principle becomes a compliance target, it loses meaning. RAI must be incorporated across the AI lifecycle, from procurement systems to documentation practices and user testing, extending it well beyond policy statements.

3. Big Tech's embrace of RAI has helped popularise it, but risks ethics washing.

Terms like “trustworthy AI” are often used without enforceable commitments. Big Tech embraces a language of responsibility to self-regulate and manage reputation, rather than driving genuine accountability. With no external checks, companies define and audit their own ethics, creating a conflict of interest that turns responsibility into branding and sidelines the structural changes real accountability demands.

4. Responsibility without infrastructure is responsibility without power.

Social impact organisations often want to act responsibly but face barriers: limited AI literacy, short funding cycles, and unclear regulations. Good intentions alone are not enough. Without time, resources, and policy support to design and adapt responsibly, RAI remains aspirational rather than actionable.

5. Responsible AI must be user-centred and context-grounded, not abstract.

Designers must consider purpose, data, interface, and unintended effects. Building AI responsibly means centring communities, positionalities, and downstream effects throughout design and evaluation, rather than treating context as an afterthought.

6. Responsible AI must tackle climate, labour, and systemic harms.

Responsible AI should account not just for bias or safety, but also for the environmental footprint of AI systems, the rights and well-being of gig workers, the impact on labour markets, and the normalisation of surveillance. Advocating for RAI means advocating for AI that respects all stakeholders and considers the full spectrum of ecological and social costs.

7. Voluntary principles won't hold: regulation must create real accountability.

In India and globally, voluntary ethics have shown their limits. What is crucial is context-aware, nuanced regulation that allows room for innovation while serving people and the planet, not just companies’ bottom lines. Otherwise, the burden of ethics unfairly falls on users and practitioners, while powerful actors evade scrutiny.

For more such insights, take a look at some of our flagship projects on Responsible AI:

- The Practice Playbook on Responsible AI: A resource offering organisations detailed, step-by-step recommendations for designing and deploying AI interventions for social impact.

- Provocations on AI Sovereignty: Developed from reflections on the matter with 18 of India’s leading AI thinkers, this report explores what digital sovereignty means, assesses its feasibility, examines who benefits from such an agenda, and unpacks the new policy conundrums it raises.

- Mapping the Potentials and Limitations of Using Generative AI Technologies to Address Socio-Economic Challenges in LMICs: Written as part of our project, AI Ethics in the Global South: Capacity-strengthening and Learning, the article explores key insights, real-world use cases, and what it will take to make GenAI work responsibly.

- Responsible AI Fellowship: India’s first capacity-strengthening programme on Responsible AI, mentoring social impact organisations on integrating responsible AI principles.

- AI + Climate Futures in Asia and Code Green: Cutting through the hype around AI & Climate Action in Asia, surfacing the latest scientific research & expert insights.

- From Code to Consequence: Interrogating Gender Biases in LLMs in India: Research study on gender bias in Indian LLMs, covering the entire lifecycle from scoping to deployment.

- Future of Work: Unpacking the impact of rapid GenAI adoption on India’s future of work to identify key levers of change and policy pathways towards inclusive labour futures.